Claude is the conversational assistant developed by Anthropic, a U.S. company specializing in artificial intelligence. Often presented as a direct competitor to ChatGPT, Claude stands out for its focus on safety, ethics, and the reliability of its answers. Since its first version in March 2023, Claude has evolved into a versatile tool used in many sectors, from content creation to data analysis. This guide explains what the assistant is, how it works, its main uses, its advantages and limits, and its future prospects.

What is Claude AI?

Claude is a large language model (LLM) designed to understand and generate natural-language text. Its name is generally taken to be a tribute to Claude Shannon, a pioneer of information theory, which fits Anthropic's stated aim of building an AI that handles information with rigor and caution.

Like ChatGPT, Claude can hold conversations, write text, analyze documents, and carry out complex tasks. However, Anthropic places particular emphasis on conversational safety, seeking to minimize inappropriate responses, bias, and hallucination risks.

The history and evolution of Claude

Anthropic was founded in 2021 by former OpenAI members, including Dario and Daniela Amodei, with the mission of developing AIs that are more transparent, controllable, and reliable. Within its first two years, the company raised several hundred million dollars, and it later secured large investments from Google and Amazon to fund research and training of its models.

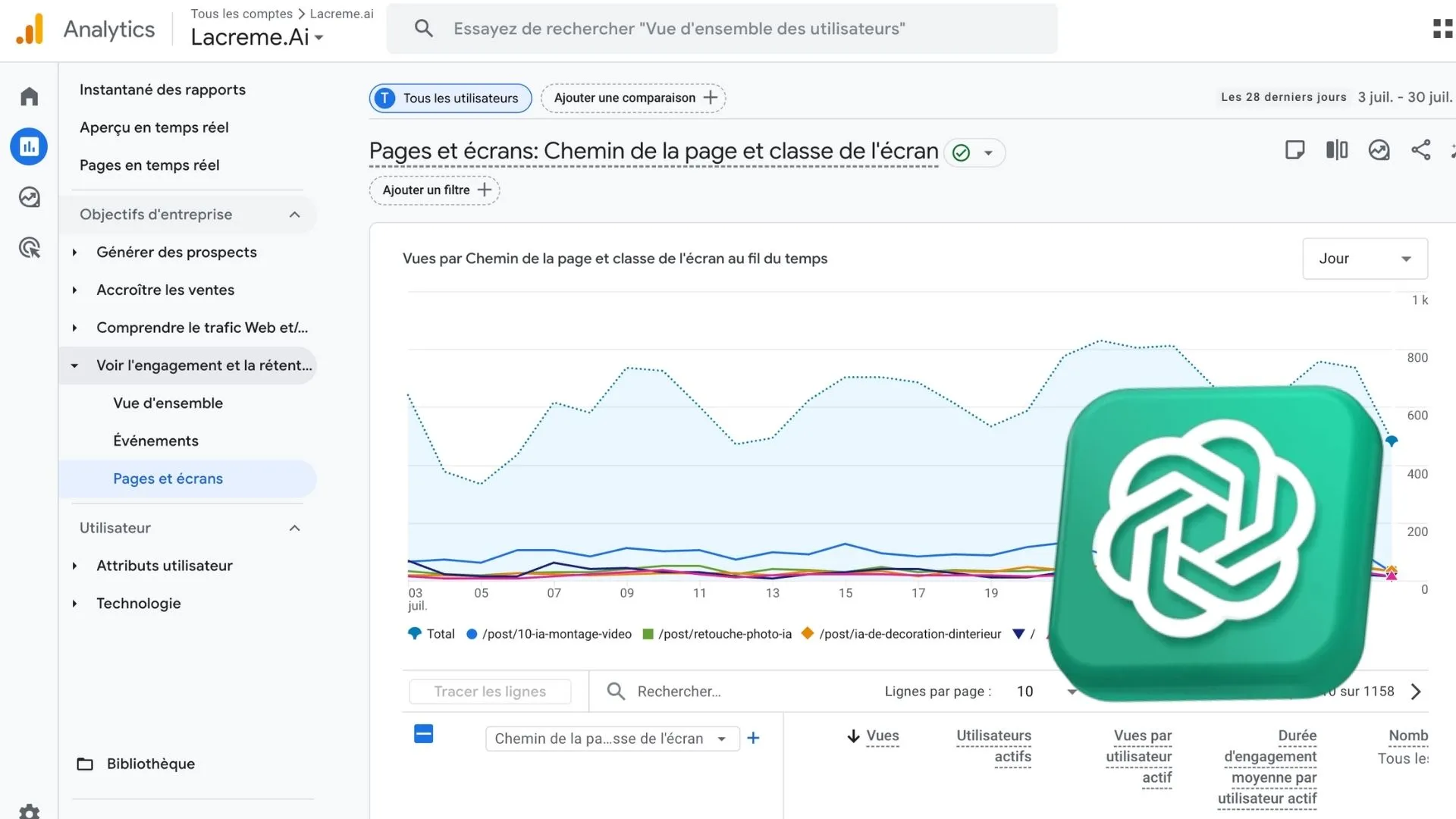

The first public version, Claude 1, was launched in March 2023. Claude 2 followed in July 2023, improving context length, accuracy, and response coherence. In March 2024, the Claude 3 family marked a major advance, with a context window of up to 200,000 tokens and support for image input, enabling analysis of long documents or extended conversations without loss of context. Claude 3.5 Sonnet arrived in June 2024, faster and more accurate, with better multimodal skills, and newer model generations have followed in 2025.

How does Claude AI work?

To understand how Claude works, look at the technology that underpins it. Like other LLMs, Claude relies on a Transformer-based architecture trained on a vast corpus of textual data. Its distinctive feature is a training method called Constitutional AI: the model is given a written set of principles (a "constitution"), critiques and revises its own draft answers against them, and is then further trained using feedback generated with reference to those principles.

Practically speaking, instead of only producing the most statistically likely answer, Claude is also trained to follow principles of safety, honesty, and respectfulness in its wording. The aim is to reduce the risk of hallucinations or inappropriate remarks while maintaining relevance and conversational fluency.
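The critique-and-revise idea behind Constitutional AI can be sketched as a toy loop. This is an illustration only, not Anthropic's training code: the principles, the function names (`critique_and_revise`, `toy_critique`, `toy_revise`), and the keyword-matching stubs are all assumptions made so the example runs offline. In the published method, a language model writes the critiques and revisions, and the revised answers then serve as training data.

```python
from typing import Callable, List

# Hypothetical principles for illustration; not Anthropic's actual constitution.
PRINCIPLES: List[str] = [
    "Avoid insulting language.",
    "Do not state guesses as facts.",
]


def critique_and_revise(
    draft: str,
    principles: List[str],
    critique: Callable[[str, str], str],
    revise: Callable[[str, str], str],
) -> str:
    """Check the draft against each principle and revise it when a critique is raised."""
    for principle in principles:
        feedback = critique(draft, principle)
        if feedback:  # an empty string means no violation was found
            draft = revise(draft, feedback)
    return draft


# Stub "model" calls so the sketch runs without any API.
# A real system would ask a language model for the critique and the revision.
def toy_critique(draft: str, principle: str) -> str:
    if "insulting" in principle and "stupid" in draft:
        return "Remove the word 'stupid'."
    return ""


def toy_revise(draft: str, feedback: str) -> str:
    # The toy reviser ignores the feedback text and applies one fixed repair.
    return draft.replace("stupid ", "")


revised = critique_and_revise(
    "That is a stupid question, but the answer is 4.",
    PRINCIPLES,
    toy_critique,
    toy_revise,
)
print(revised)
```

The point of the sketch is the control flow: the principles are plain text, and each one gets a chance to trigger a revision before the answer is kept.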

Uses of Claude AI

Claude is used across a wide range of domains. Content writers use it to create articles, scripts, or product descriptions. Companies integrate it into their customer support systems to provide fast, precise answers. Researchers use it to summarize large amounts of data or explore hypotheses.

Its large context window makes it particularly suitable for analyzing long documents such as contracts, financial reports, and market studies. In programming, it can generate or fix code, suggest more efficient algorithms, and explain technical concepts. In education, it serves as a virtual tutor capable of providing detailed explanations tailored to the learner's level.
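Before sending a long contract or report, it helps to check roughly whether it fits in the context window. The sketch below is a back-of-the-envelope estimate, not a real tokenizer: the ratio of about four characters per token is an assumption that only holds loosely for English text, and the helper names and the `reserved_for_answer` budget are hypothetical.

```python
from typing import List

CONTEXT_WINDOW_TOKENS = 200_000  # context size quoted in this guide
CHARS_PER_TOKEN = 4              # rough assumption for English text, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the character count, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_answer: int = 4_000) -> bool:
    """Return True if the text plus a budget for the reply fits in the window."""
    return estimate_tokens(text) + reserved_for_answer <= CONTEXT_WINDOW_TOKENS


def split_into_chunks(text: str, max_tokens: int) -> List[str]:
    """Cut the text into consecutive pieces of at most max_tokens estimated tokens."""
    max_chars = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


report = "word " * 100_000  # stand-in for a 500,000-character document
print(estimate_tokens(report), fits_in_context(report))
```

If a document does not fit, `split_into_chunks` gives a simple fallback: summarize each piece separately, then combine the summaries. Splitting on character counts can cut a sentence in half, so a production version would split on paragraph or section boundaries instead.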

Advantages of Claude

One of the major strengths of Claude AI is its ability to maintain a respectful, clear, and factual tone, even on sensitive topics. Its training via Constitutional AI helps reduce the risk of biased or offensive answers. Its very large context window is a considerable advantage for users who need to process long content in one go.

Claude is also recognized for its reliability with complex instructions, making it a valuable tool for professionals seeking precision and consistency. Its speed and ability to produce well-structured text enhance its usefulness in demanding environments.

Limits and precautions

Even though Claude is more cautious than some competitors, it is not without limitations. Like any LLM, it can produce erroneous or inaccurate information. Users should therefore verify important data, especially in domains where accuracy is critical, such as health, law, or finance.

Its caution can sometimes lead to refusals or incomplete answers, particularly on topics the model deems sensitive. Moreover, although Anthropic says it limits exposure of user-entered data, privacy remains a concern for companies handling strategic information.

Ethical issues and Anthropic's vision

Anthropic has built its reputation on a responsible approach to artificial intelligence. With Claude AI, the company aims to establish a safe dialogue between humans and machines. This involves rigorous bias management, implementation of safeguards, and transparent communication about the model's capabilities and limits.

This philosophy fits into a global context in which AI regulation is becoming a priority. The AI Act in Europe and regulatory initiatives in the United States are pushing players like Anthropic to strengthen their commitments to safety and privacy.

Recent and future developments

In 2024 and 2025, Claude AI benefited from major updates. A context window of 200,000 tokens lets it handle entire projects in one pass, such as a full-length book or a large collection of documents. New versions improved multilingual handling and nuanced understanding of complex instructions.

Claude 3 and later models already accept images as input, including charts and diagrams. Upcoming developments could include more advanced multimodal functions, such as voice generation. Anthropic is also exploring deeper integrations with office suites, CRMs, and development tools.

Claude's impact on the AI market

The arrival of Claude AI has intensified competition in the conversational AI sector, prompting established players like OpenAI and Google to accelerate their innovations. Users thus benefit from models that perform better, are more reliable, and are better suited to professional needs.

For businesses, Claude represents a strategic alternative, especially for those that prioritize safety and answer reliability. For individuals, it offers a smooth, respectful conversational experience while remaining capable of handling complex tasks.

Conclusion

Anthropic's Claude stands out as one of the most advanced and safest AI assistants on the market. Its ethical approach, large context capacity, and versatility make it an essential tool for both professionals and individuals.

However, like any generative AI, using it requires discernment and verification of information. The coming years should confirm its major role in an ecosystem where trust and accuracy are becoming essential criteria.
